In this tutorial, we will show you how to integrate and remotely control Trossen Robotics' WidowX 250 robotic arm.

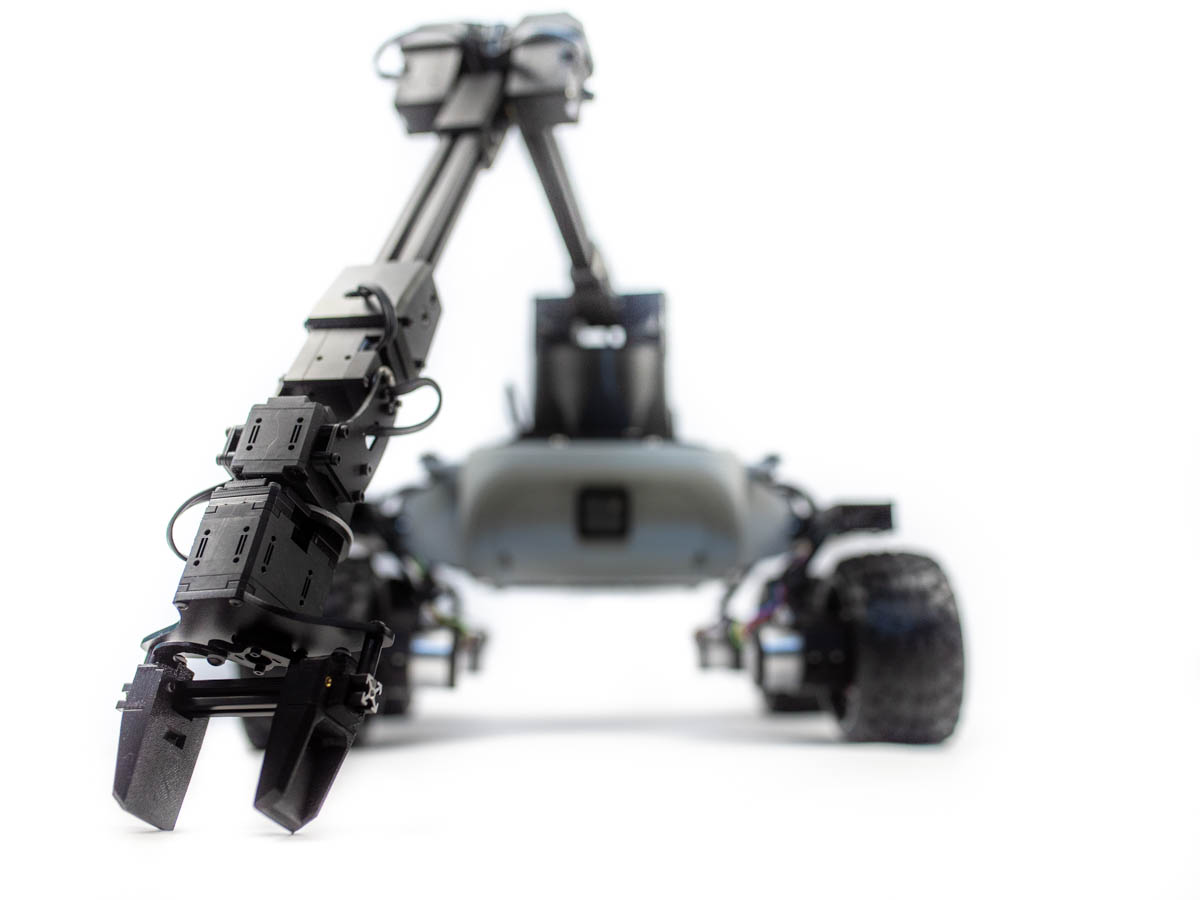

With 6 degrees of freedom and a reach of 650 mm, the WidowX 250 is the biggest and most capable robotic arm we have ever tried to mount on a Leo Rover.

After finishing this tutorial, you'll be able to control the arm with a joystick and the Python API, visualize the arm's model, and plan its motion trajectories with ROS MoveIt.

Mounting the arm is particularly easy. If you bought the arm with the modified support plate designed for our robot, you can use screws and nuts to attach the arm to the rover's mounting plate.

If you have the original support plate, you can get the model for 3D printing here (addons section):

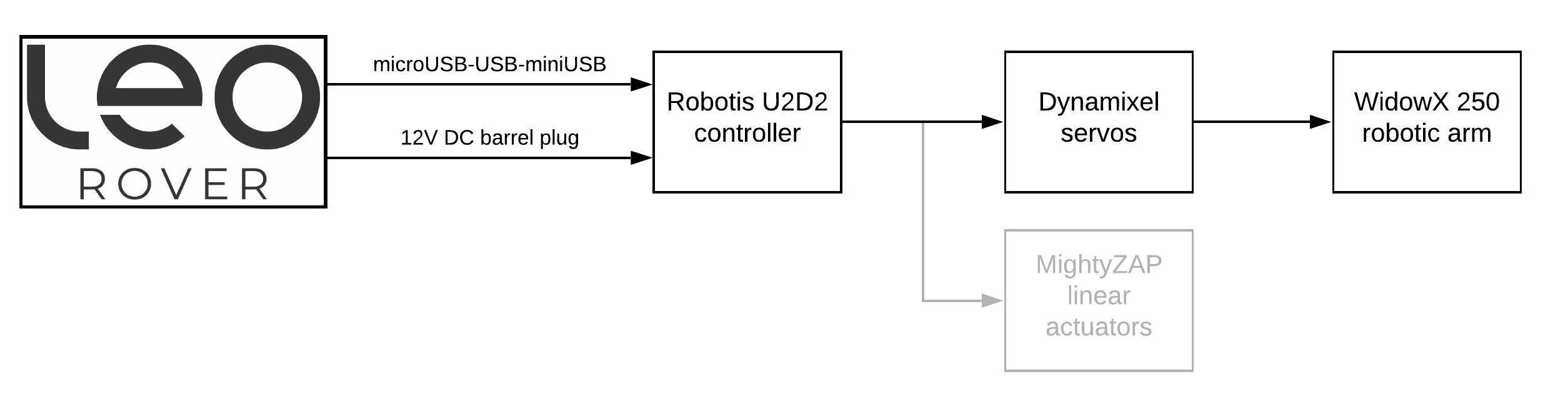

Plug the Dynamixel cable coming out of the base of the arm into the power distribution board.

Connect the U2D2 and the power distribution board with the short Dynamixel cable.

Connect the U2D2 to the rover using a USB cable.

The last step is to connect the barrel jack cable to the battery power supply (a powerbox might be useful here) and plug its other end into the power distribution board.

Integrating the arm with the system

First, make sure the U2D2 device is available at a fixed path on the rover's system. To do this, add a udev rule. Open a new .rules file with nano:

and paste the following rule:

To save the file, press Ctrl+O and then Enter to confirm. Press Ctrl+X to exit nano.

For the rule to take effect, reboot the system or just type:

The device should now be available at the /dev/ttyDXL path. You can check it by typing:
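For reference, a udev rule of this kind is a single line that matches the U2D2's USB serial chip and adds a ttyDXL symlink. The Python sketch below composes such a line and checks whether the symlink resolves. The vendor and product IDs 0403 and 6014 are an assumption (an FTDI USB-serial chip), so confirm them with lsusb on your rover before using the output.

```python
from pathlib import Path
from typing import Optional


def make_udev_rule(vendor_id: str, product_id: str, symlink: str) -> str:
    """Return one udev rule line that adds /dev/<symlink> for a matching USB serial device."""
    # Doubled braces in the f-string produce the literal { } that udev syntax needs.
    return (
        f'SUBSYSTEM=="tty", ATTRS{{idVendor}}=="{vendor_id}", '
        f'ATTRS{{idProduct}}=="{product_id}", SYMLINK+="{symlink}"'
    )


def symlink_target(symlink: str, dev_dir: str = "/dev") -> Optional[Path]:
    """Return the device node behind <dev_dir>/<symlink>, or None if the link is missing."""
    link = Path(dev_dir) / symlink
    return link.resolve() if link.is_symlink() else None


if __name__ == "__main__":
    # Assumed IDs of the U2D2's USB-serial chip; verify them with lsusb first.
    print(make_udev_rule("0403", "6014", "ttyDXL"))
    print(symlink_target("ttyDXL"))
```

Once the rule is active, symlink_target("ttyDXL") should return the underlying serial device (typically a /dev/ttyUSB* node) instead of None.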

To integrate the arm, you will need to build some additional ROS packages. Start by creating a local catkin workspace, if you don't have one yet:

The package sources for the arm are available on GitHub in the interbotix_ros_arms repository. Clone the repository to your source space:

On the rover, you will only need the driver node for the arm (interbotix_sdk package) and the URDF description (interbotix_descriptions package). To speed up the building process, remove the unwanted packages:
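The pruning step can also be done from Python. This sketch deletes every top-level directory of the cloned repository except the two packages named above. It leaves plain files and hidden directories such as .git alone, and with dry_run=True it only reports what it would remove. The repository path you pass in (for example ~/ros_ws/src/interbotix_ros_arms) is an assumption about your workspace layout.

```python
import shutil
import tempfile
from pathlib import Path

KEEP = {"interbotix_sdk", "interbotix_descriptions"}


def prune_packages(repo_dir, keep=KEEP, dry_run=False):
    """Remove top-level directories of repo_dir not in keep; return their sorted names."""
    removed = []
    for entry in sorted(Path(repo_dir).expanduser().iterdir()):
        # Plain files (README, LICENSE) and hidden dirs such as .git are left untouched.
        if entry.is_dir() and not entry.name.startswith(".") and entry.name not in keep:
            if not dry_run:
                shutil.rmtree(entry)
            removed.append(entry.name)
    return removed


if __name__ == "__main__":
    # Demo on a throwaway directory that mimics a cloned repository.
    with tempfile.TemporaryDirectory() as tmp:
        for name in ("interbotix_sdk", "interbotix_descriptions", "interbotix_examples", ".git"):
            (Path(tmp) / name).mkdir()
        print(prune_packages(tmp, dry_run=True))  # prints ['interbotix_examples']
```

Running it once with dry_run=True before the real deletion is a cheap way to make sure the path points at the right repository.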

Now, use rosdep to install any dependent packages:

and build the workspace:

Once the packages have been built, you can edit the environment setup file to point to your result space. Open the file in nano:

Comment out the first line by adding a # sign at its beginning, and add the source command for your workspace. The first two lines should look like this:
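Commenting out the first line works because sourcing a catkin workspace's devel/setup.bash also sets up the ROS installation the workspace was built on. That assumes the first line sources the system ROS installation. The edit itself is a small text transformation, and the sketch below applies it to the file contents as a string. The file contents and workspace path in the demo are hypothetical. The function is meant to be applied once, because running it again would comment out and insert a second time.

```python
def point_setup_to_workspace(text: str, workspace_setup: str) -> str:
    """Comment out the first line and insert `source <workspace_setup>` right after it."""
    lines = text.splitlines()
    if not lines:
        return f"source {workspace_setup}\n"
    return "\n".join(["#" + lines[0], f"source {workspace_setup}", *lines[1:]]) + "\n"


if __name__ == "__main__":
    # Hypothetical file contents and workspace path, for illustration only.
    original = "source /opt/ros/melodic/setup.bash\nexport ROS_IP=10.0.0.1\n"
    print(point_setup_to_workspace(original, "/home/pi/ros_ws/devel/setup.bash"), end="")
```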

Now, to add the arm's driver to the rover's launch file, open the robot.launch file:

and paste these lines somewhere between the <launch> tags:
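Whatever the exact lines are, they become child elements of the root <launch> element. As an illustration, the sketch below appends an <include> element with Python's xml.etree.ElementTree. The include path $(find interbotix_sdk)/launch/arm_run.launch and the robot_name argument are assumptions, so take the real lines from the interbotix_sdk documentation.

```python
import xml.etree.ElementTree as ET


def add_include(launch_xml: str, launch_file: str, args: dict) -> str:
    """Append <include file=...> with one <arg> per entry of args to the root <launch>."""
    root = ET.fromstring(launch_xml)
    if root.tag != "launch":
        raise ValueError("root element must be <launch>")
    include = ET.SubElement(root, "include", {"file": launch_file})
    for name, value in args.items():
        ET.SubElement(include, "arg", {"name": name, "value": str(value)})
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Assumed include path and argument name; check interbotix_sdk for the real ones.
    print(add_include("<launch></launch>",
                      "$(find interbotix_sdk)/launch/arm_run.launch",
                      {"robot_name": "wx250"}))
```

ElementTree drops XML comments when parsing by default, so for a commented robot.launch, editing by hand in nano keeps the file tidier.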

You can also edit the robot's URDF file to connect the arm's base link to the rover's model. To do this, open the robot.urdf.xacro file:

and paste these lines somewhere between the <robot> tags:
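In URDF, two models are connected by a fixed joint whose parent is a rover link and whose child is the arm's base link. The sketch below generates such a <joint> element. The joint name arm_mount_joint, the link names base_link and wx250/base_link, and the 0.1 m height offset are placeholders, not measured values for the Leo Rover mounting. The real fragment may also need to pull in the arm's own description, which this sketch does not cover.

```python
import xml.etree.ElementTree as ET


def fixed_joint(name, parent, child, xyz=(0.0, 0.0, 0.0), rpy=(0.0, 0.0, 0.0)):
    """Return a URDF fixed <joint> attaching child to parent at the given origin."""
    joint = ET.Element("joint", {"name": name, "type": "fixed"})
    ET.SubElement(joint, "parent", {"link": parent})
    ET.SubElement(joint, "child", {"link": child})
    # URDF expects space-separated metres (xyz) and radians (rpy).
    ET.SubElement(joint, "origin", {
        "xyz": " ".join(f"{v:g}" for v in xyz),
        "rpy": " ".join(f"{v:g}" for v in rpy),
    })
    return ET.tostring(joint, encoding="unicode")


if __name__ == "__main__":
    # Placeholder names and offset; measure your own mounting.
    print(fixed_joint("arm_mount_joint", "base_link", "wx250/base_link", xyz=(0.0, 0.0, 0.1)))
```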

That's it! On the next boot, the arm driver node will start together with all the other nodes. You can also restart the running nodes manually by typing:

Now that you have the driver running, you should see the new ROS topics and services under the /wx250 namespace. For a full description of the ROS API, visit the interbotix_sdk README page. You can test some of the features with the rostopic and rosservice command-line tools:

Retrieve the information about the arm:

Publish a position command to the elbow joint:

Turn off the torque on all joints:
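The three checks above correspond to rosservice call and rostopic pub invocations. The sketch below only assembles those command lines so they can be printed or passed to subprocess.run on the rover. The service names get_robot_info and torque_joints_off, the topic single_joint/command, and the message type interbotix_sdk/SingleCommand (with fields joint_name and cmd) are assumptions about the interbotix_sdk interface. Confirm them with rosservice list and rostopic list before relying on them.

```python
import shlex


def arm_test_commands(robot_name: str = "wx250", elbow_rad: float = 0.5) -> dict:
    """Return argument lists for the three checks, namespaced under /<robot_name>."""
    ns = f"/{robot_name}"
    return {
        # Query information about the arm (assumed service name).
        "info": ["rosservice", "call", f"{ns}/get_robot_info"],
        # Publish one position command (radians) to the elbow; -1 publishes once and exits.
        "elbow": ["rostopic", "pub", "-1", f"{ns}/single_joint/command",
                  "interbotix_sdk/SingleCommand",
                  f"{{joint_name: 'elbow', cmd: {elbow_rad}}}"],
        # Disable torque on all joints (assumed service name); the arm will go limp.
        "torque_off": ["rosservice", "call", f"{ns}/torque_joints_off"],
    }


if __name__ == "__main__":
    for args in arm_test_commands().values():
        # shlex.join quotes each argument so the line can be pasted into a shell.
        print(shlex.join(args))
```

Support the arm by hand before turning the torque off, since nothing will hold it against gravity afterwards.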

The interbotix_ros_arms repository contains some packages that will let you control the arm in different ways. To use them on your computer, you will need to have ROS installed:

and configured to communicate with the nodes running on the rover. For this, see the Connecting other computer to ROS network section of the ROS Development tutorial:
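In practice, this configuration comes down to two environment variables on your computer. ROS_MASTER_URI points at the ROS master on the rover, and ROS_IP is set to your computer's address on the rover's network. The sketch below prints the matching export lines. The rover address 10.0.0.1 and the computer address 10.0.0.52 are assumptions, while 11311 is the default roscore port.

```python
import ipaddress


def ros_network_exports(rover_ip: str, my_ip: str, master_port: int = 11311) -> str:
    """Return shell export lines pointing this computer's ROS tools at the rover's master."""
    # ip_address() raises ValueError on malformed input, which catches typos early.
    ipaddress.ip_address(rover_ip)
    ipaddress.ip_address(my_ip)
    return (f"export ROS_MASTER_URI=http://{rover_ip}:{master_port}\n"
            f"export ROS_IP={my_ip}\n")


if __name__ == "__main__":
    # Assumed addresses; replace them with the ones on your network.
    print(ros_network_exports("10.0.0.1", "10.0.0.52"), end="")
```

Appending the printed lines to ~/.bashrc applies them to every new terminal.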

First, install some prerequisites:

and create a catkin workspace:

Clone the interbotix_ros_arms and leo_description repositories into the source space:

Install dependencies using the rosdep tool:

and build the workspace:

Now, source the devel space to make the new packages visible in your shell environment:

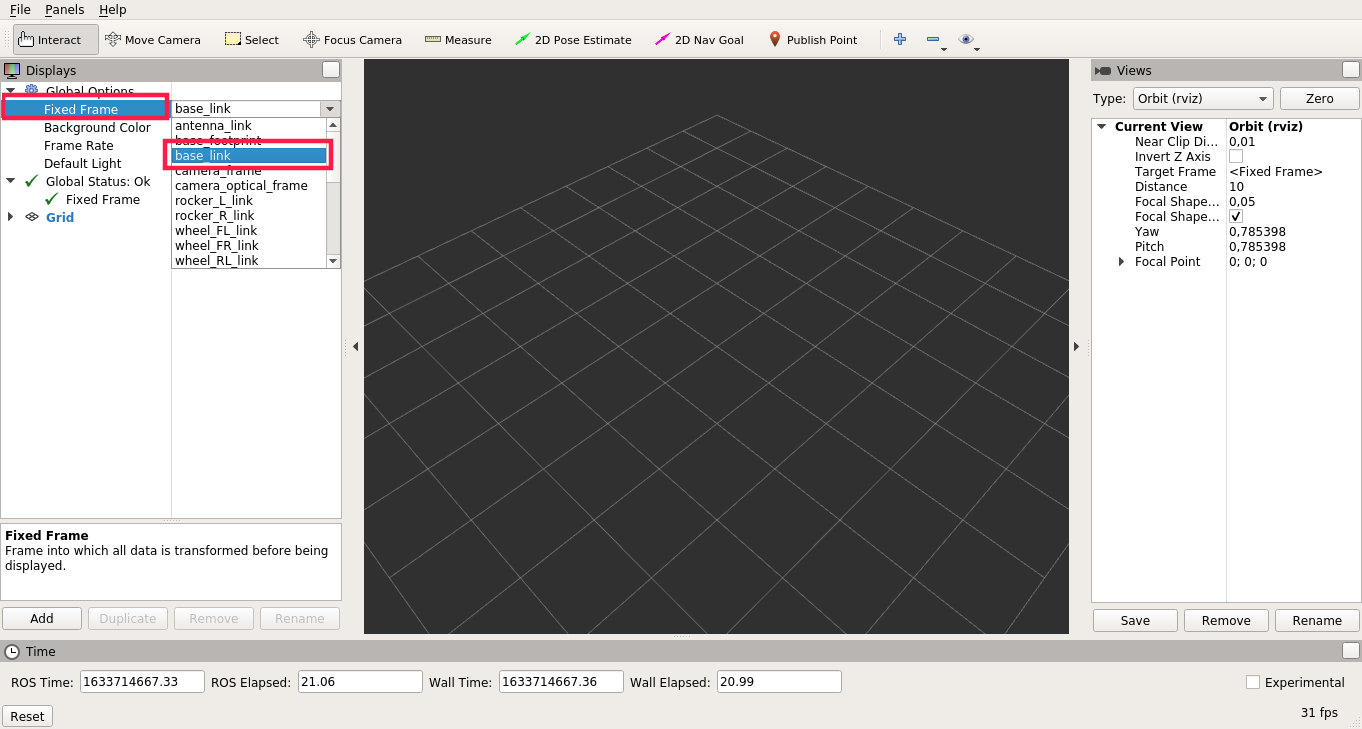

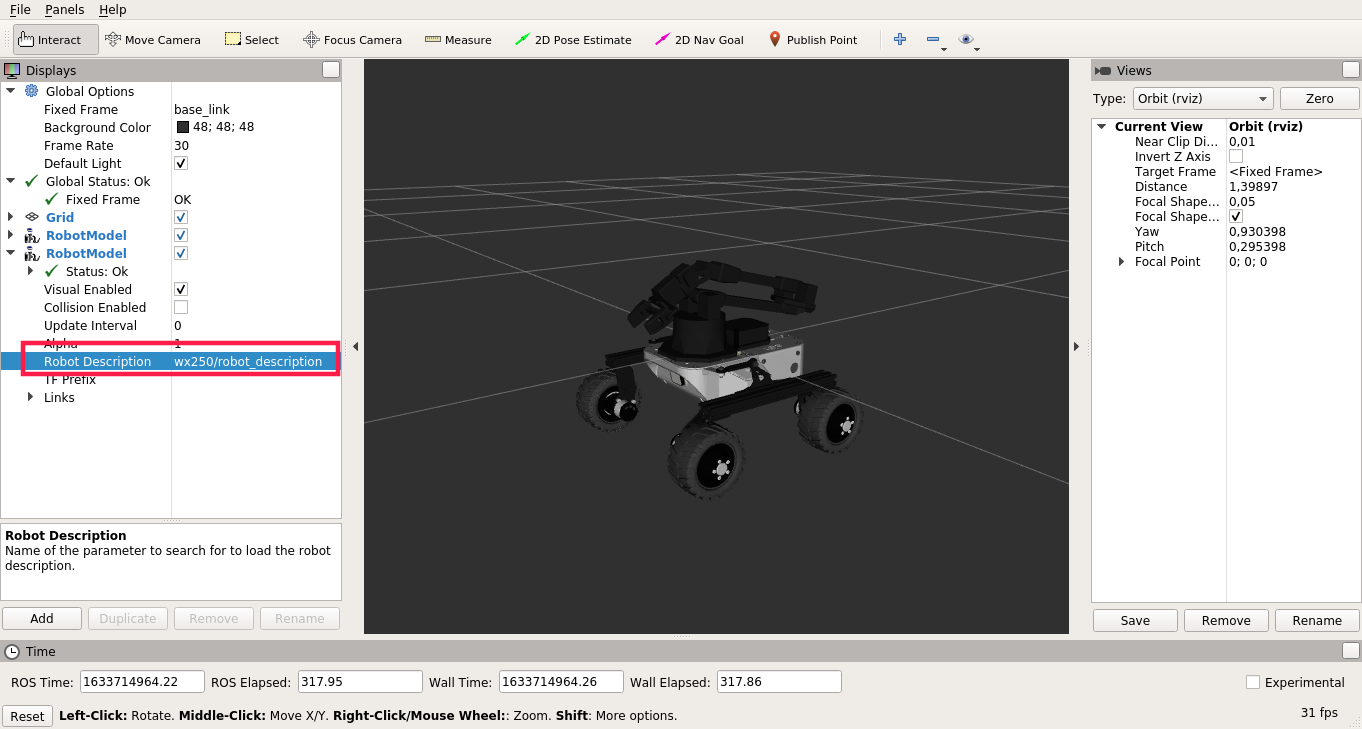

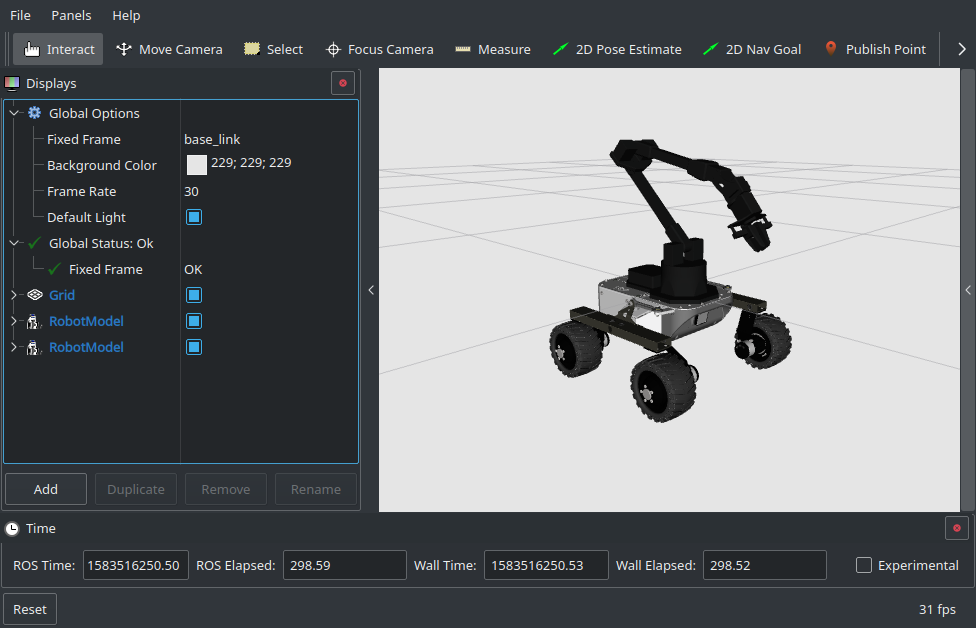

The effect should look similar to this:

The MoveIt motion planning framework lets you plan and execute a collision-free trajectory to a target pose of the end-effector. To use it, first make sure the use_moveit parameter of the arm driver is set to true:
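If the driver is started through an <include> element in robot.launch, setting this parameter usually means changing one <arg> value. The sketch below updates an argument passed to any include. It assumes the argument is named use_moveit, as in the text, and is written as <arg name=... value=...>. The file name arm.launch in the demo is hypothetical. Top-level <arg> declarations are deliberately left alone, because giving a declaration a value would stop it from being overridden on the command line.

```python
import xml.etree.ElementTree as ET


def set_include_arg(launch_xml: str, arg_name: str, value: str) -> str:
    """Set value= on <arg name=arg_name> children of every <include> in a launch file."""
    root = ET.fromstring(launch_xml)
    changed = 0
    for include in root.iter("include"):
        for arg in include.findall("arg"):
            if arg.get("name") == arg_name:
                arg.set("value", value)
                changed += 1
    if changed == 0:
        raise KeyError(f"no <include> passes an argument named {arg_name!r}")
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    launch = ('<launch><include file="arm.launch">'
              '<arg name="use_moveit" value="false"/></include></launch>')
    print(set_include_arg(launch, "use_moveit", "true"))
```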

On your computer, type:

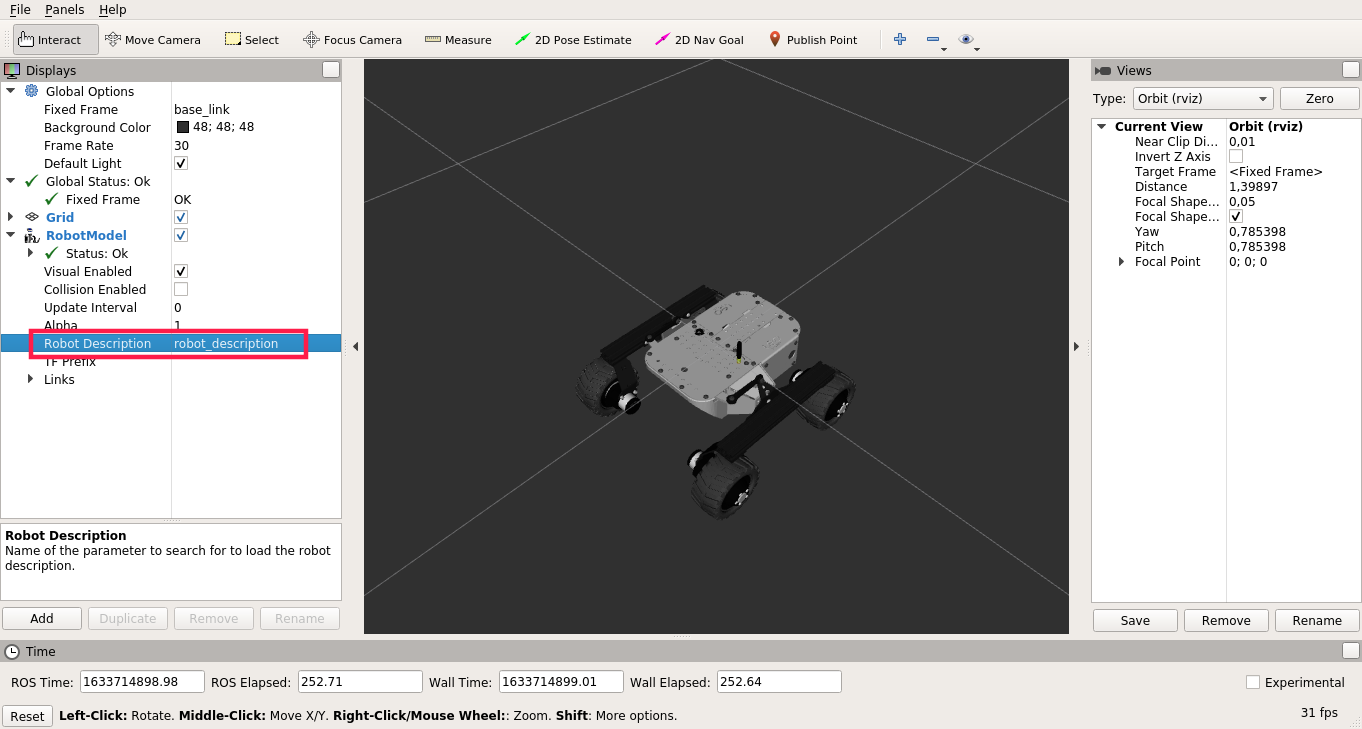

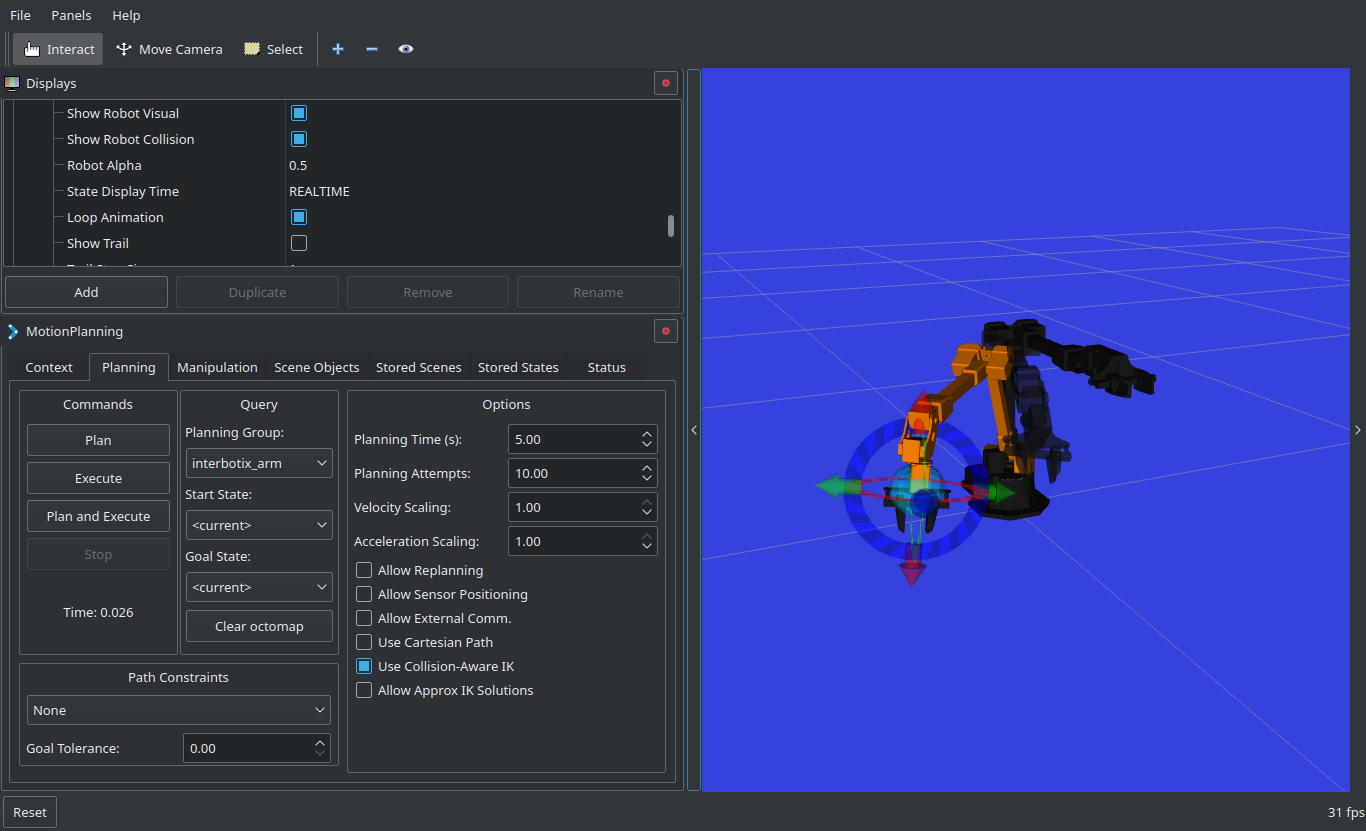

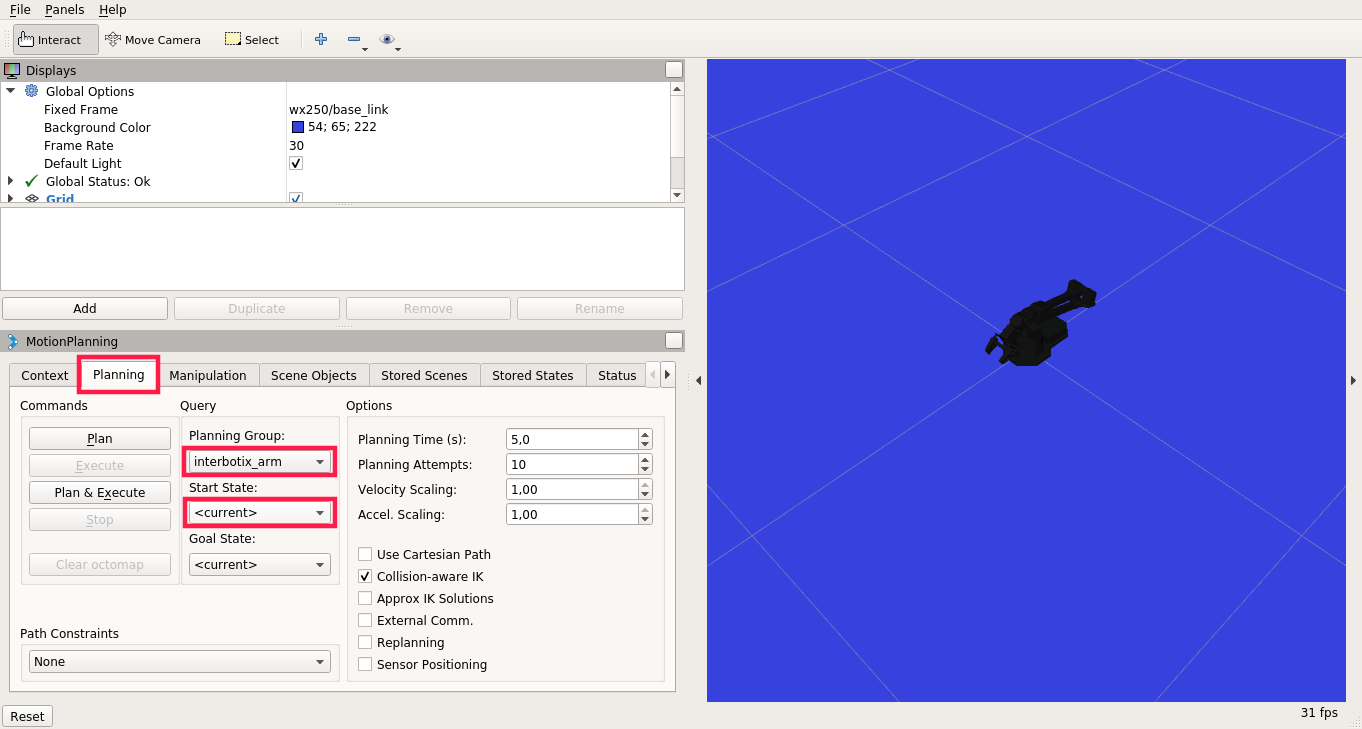

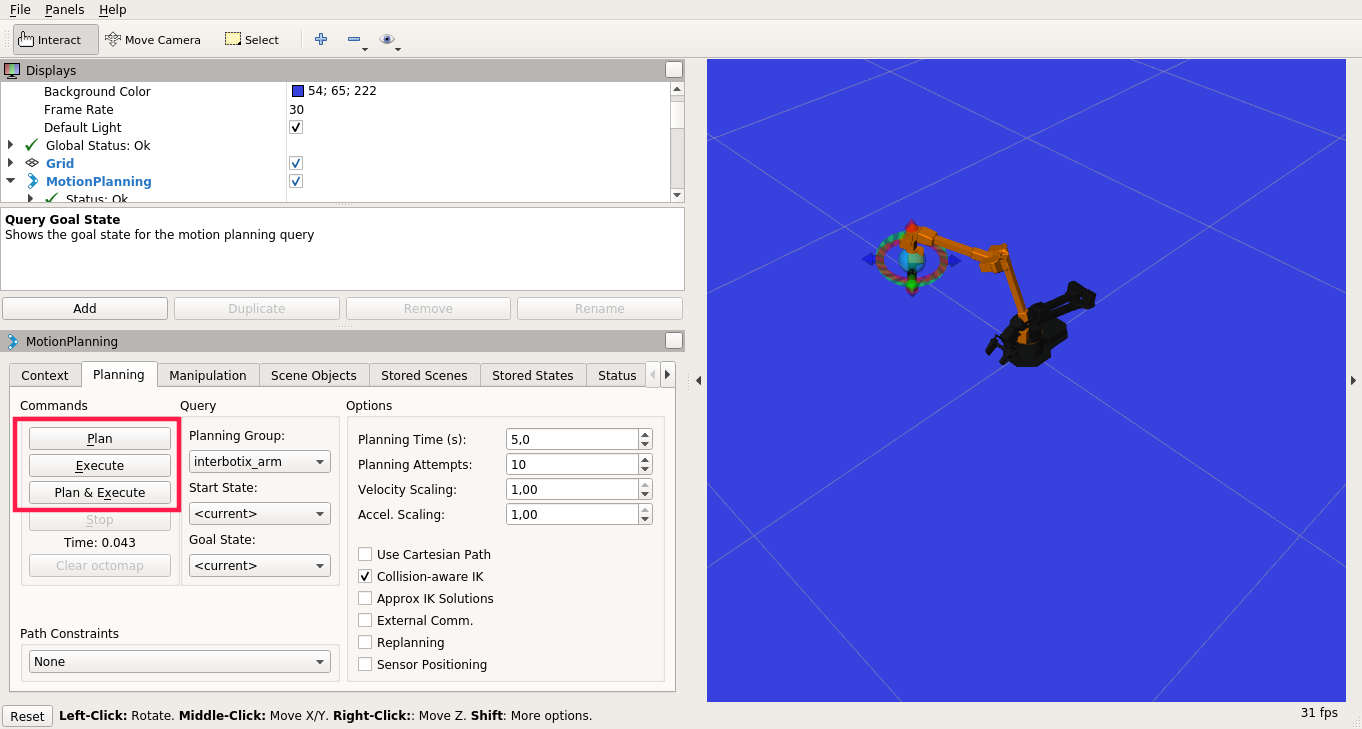

The MoveIt GUI should appear:

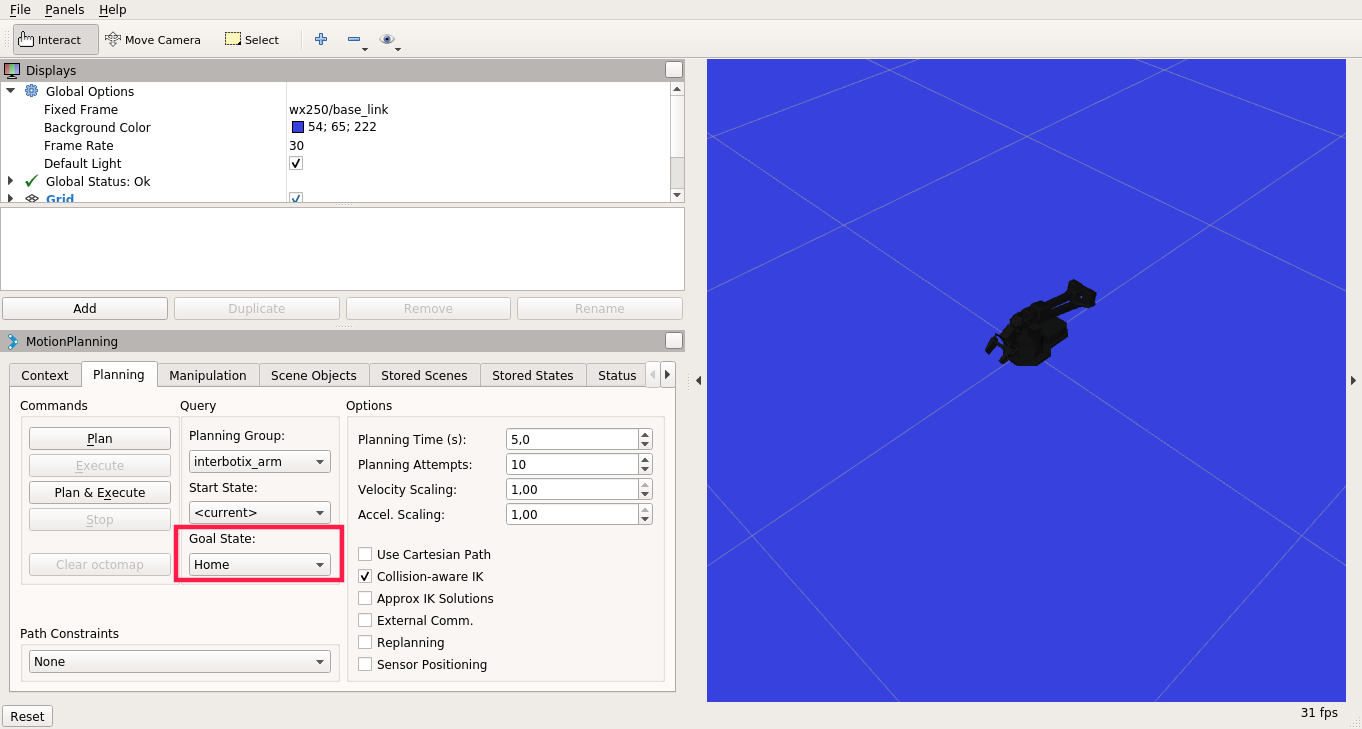

On the MotionPlanning panel, click on the Planning tab, choose interbotix_arm for the Planning Group and <current> for the Start State.

There are some predefined poses which you can choose for the Goal State, such as home, sleep or upright.

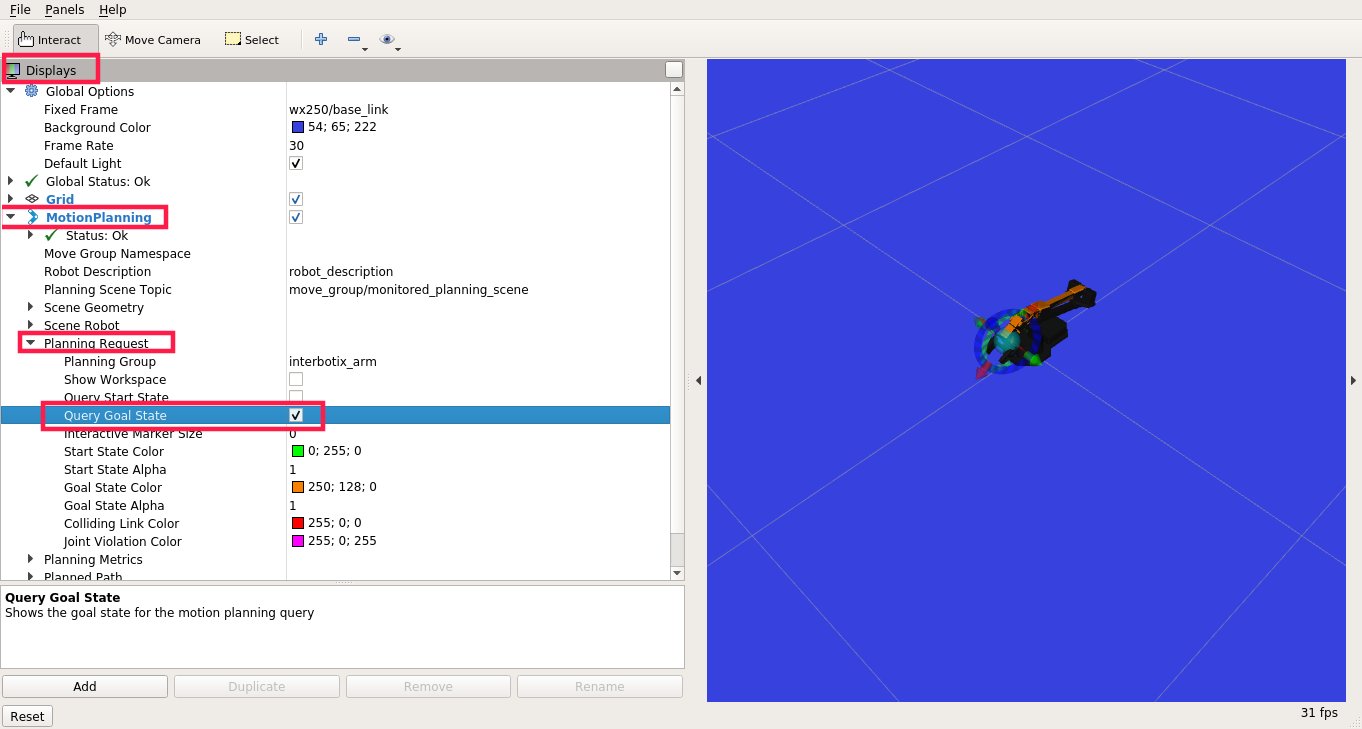

To set the goal pose yourself, go to the Displays panel -> MotionPlanning -> Planning Request and check Query Goal State. You should now be able to set the end-effector pose for the goal state manually.

When the goal state is set, click Plan to compute the trajectory (a visualization of the simulated trajectory should appear), then click Execute to send the trajectory to the driver.

If you want to use the MoveIt capabilities in a Python script or a C++ program, please look at the interbotix_moveit_interface example.

The interbotix_joy_control example package lets you control the arm's movement (using inverse kinematics) with a PS3, PS4 or Xbox 360 controller.

To use the package with the arm connected to your rover:

Aside from the driver, the interbotix_sdk package also provides a Python API for manipulating the arm. It is designed mainly to work with position mode for the arm, PWM mode for the gripper and the time-based profile. To start, you can set the same driver parameters as in the previous example.
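With a time-based profile, a move is described by its total duration and its acceleration time rather than by velocity and acceleration limits, and the servo follows a trapezoidal velocity curve. The sketch below models that curve for a single joint. It assumes symmetric acceleration and deceleration phases, with accel_time at most half of moving_time. It is a simplified model for intuition, not the Dynamixel firmware's exact behaviour.

```python
def trapezoid_position(t, start, goal, moving_time, accel_time):
    """Position at time t of a symmetric trapezoidal move from start to goal."""
    if moving_time <= 0 or not 0 <= accel_time <= moving_time / 2:
        raise ValueError("need moving_time > 0 and 0 <= accel_time <= moving_time / 2")
    # Cruise velocity chosen so the full distance is covered in exactly moving_time.
    v = (goal - start) / (moving_time - accel_time)
    if t <= 0:
        return start
    if t >= moving_time:
        return goal
    if t < accel_time:  # constant acceleration phase
        return start + 0.5 * v / accel_time * t ** 2
    if t <= moving_time - accel_time:  # constant velocity phase
        return start + v * (t - 0.5 * accel_time)
    # Constant deceleration phase, the mirror image of the acceleration phase.
    return goal - 0.5 * v / accel_time * (moving_time - t) ** 2


if __name__ == "__main__":
    # A 1.5 rad move in 2 s with 0.5 s ramps (illustrative values).
    for t in (0.0, 0.25, 0.5, 1.0, 1.5, 1.75, 2.0):
        print(f"t={t:.2f} s  position={trapezoid_position(t, 0.0, 1.5, 2.0, 0.5):.4f} rad")
```

A longer accel_time gives a softer start and stop but a higher peak velocity, because the same distance still has to be covered within moving_time.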

There are some example scripts demonstrating the API in the interbotix_examples/python_demos directory.

The bartender.py demo performs some pick, pour and place operations. To run it, first open the file in a text editor and search for this line:

Change wx250s to wx250 and then type on the terminal:

If everything went right, you should see the arm in action.
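The model-name change in bartender.py can also be scripted. The sketch below replaces the name only where it appears as a quoted string literal ("wx250s" or 'wx250s') and reports how many replacements it made, so comments and longer names that merely contain wx250s are left untouched. That the name appears quoted in bartender.py is an assumption, so check the returned count.

```python
import re
from pathlib import Path

# Matches "wx250s" or 'wx250s'; the back-reference \1 requires the same closing quote.
QUOTED_MODEL = re.compile(r"""(["'])wx250s\1""")


def retarget_model(text: str, new_model: str = "wx250"):
    """Replace quoted wx250s literals with new_model; return (new_text, count)."""
    return QUOTED_MODEL.subn(lambda m: f"{m.group(1)}{new_model}{m.group(1)}", text)


def retarget_file(path: str, new_model: str = "wx250") -> int:
    """Rewrite the file in place if anything matched; return the number of replacements."""
    p = Path(path)
    new_text, count = retarget_model(p.read_text(), new_model)
    if count:
        p.write_text(new_text)
    return count


if __name__ == "__main__":
    print(retarget_model('robot_name="wx250s"  # tuned for the wx250s'))
```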

You can check the other files in the directory for more examples. To view the available functions in the API and their documentation, take a look at the robot_manipulation.py file.
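Besides reading robot_manipulation.py directly, you can list an API's public functions and the first line of each docstring with Python's standard inspect module. The sketch below runs on the standard json module so it works anywhere. On the rover you would pass the module or class defined in robot_manipulation.py instead, and its exact import path depends on how the package is installed.

```python
import inspect
import json


def summarize_api(obj):
    """Return (name, first docstring line) for each public callable attribute of obj."""
    rows = []
    for name, member in inspect.getmembers(obj, callable):
        if name.startswith("_"):
            continue  # skip private helpers and dunder methods
        doc = inspect.getdoc(member) or ""
        rows.append((name, doc.splitlines()[0] if doc else ""))
    return rows


if __name__ == "__main__":
    for name, summary in summarize_api(json):
        print(f"{name:20} {summary}")
```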

If you found this tutorial interesting, make sure to check out other tutorials we provide on our Knowledge Base site.