A line follower example for stock Leo Rover

In this example, we will show you how to run a line follower on the Leo Rover mobile robot.

After completing this tutorial, your rover should be able to navigate a two-lined track by itself. You will also be able to gather your own data and train a neural network model on it for this task. Here's an example of our rover driving on the designated track:

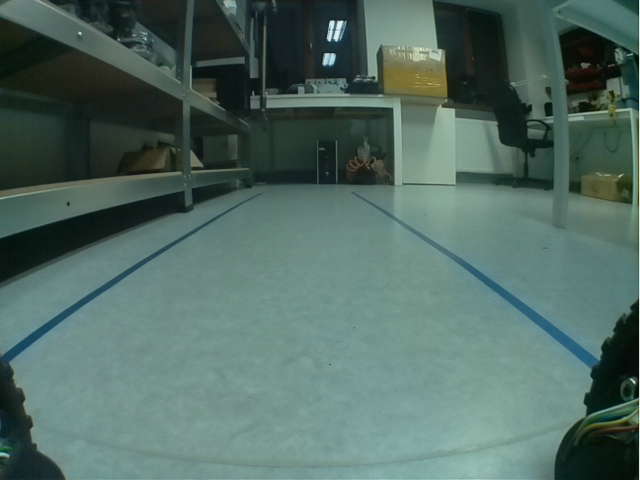

As this is one of our examples for the stock Leo Rover, you don't need to make any mechanical modifications to the rover. The only "mechanical" thing you need to do is make a two-lined track with insulating tape. Below, there's an example of the track we used to train the neural network model (the image was taken from the rover). Try to end up with something like this:

Our neural network model was converted to TensorFlow Lite, so you also need to install the TensorFlow Lite runtime on your rover:

You can install the package with apt by typing the following on the rover:

Then, you just need to source the ROS workspace:

You can also get all the required software from our leo_examples GitHub repository. Clone it on the rover into the ROS workspace directory (if there is no such directory, go through the ROS Development tutorial first):

Now, you need to install all the dependencies for the downloaded packages:

Then, you need to source the directory and build the packages:

Our approach was to extract a specific color (the color of the tape) from the camera image as a mask, and then train the neural network on that mask.

So, the first thing you need to do is find the color mask values. We have prepared a ROS node for this task. To run it, type in the terminal on the rover:

Then, on your computer, go to your ROS workspace, source it, and export the ROS environment variables so that you can run ROS nodes on your computer with the ROS master running on the rover:

Now, with the color_mask node running on the rover, run rqt on your computer to visualize the color mask and choose its values:

Now, in rqt, open the following plugins:

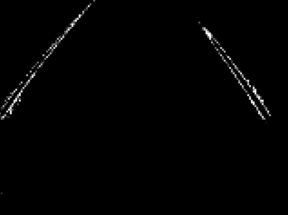

In Image View, choose the /color_mask topic from the topic drop-down list. This is a live view of the color mask applied to the rover's camera image, using the current mask values.

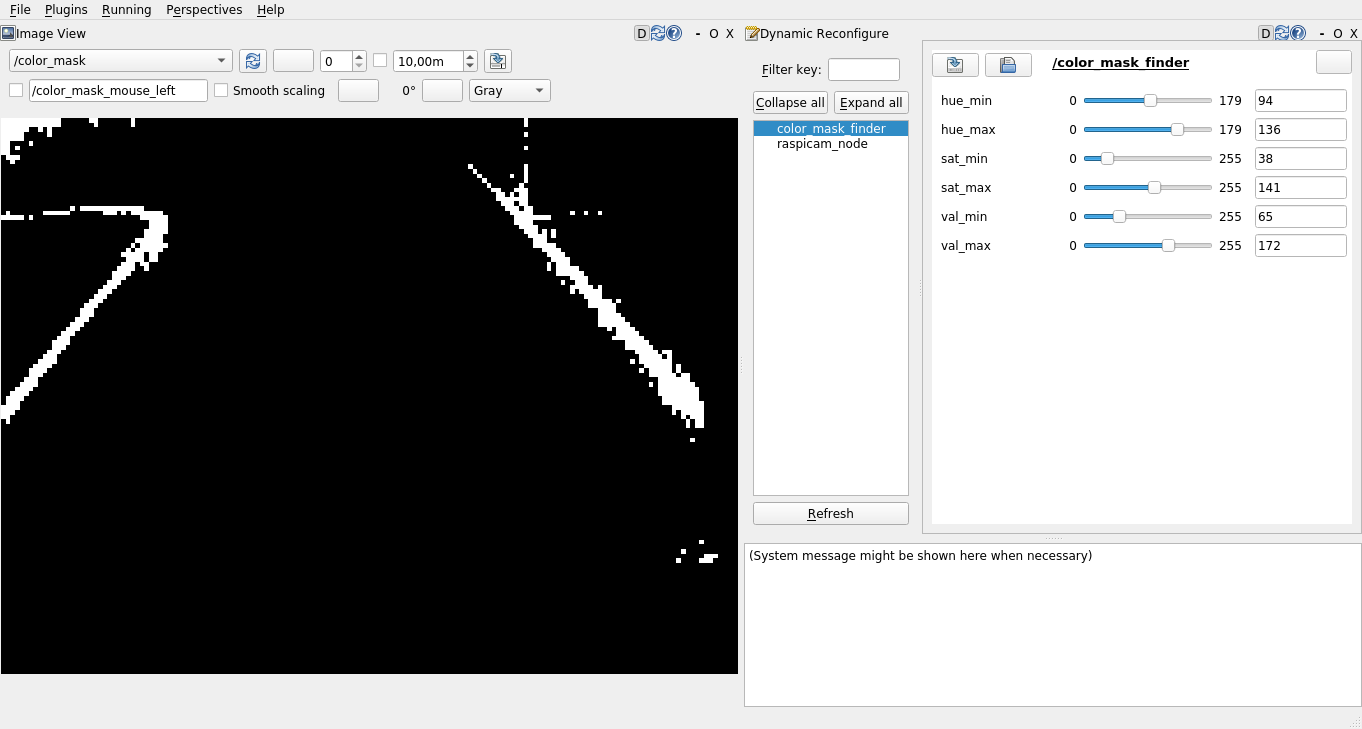

In Dynamic Reconfigure, choose color_mask_finder. You'll see something like this:

The sliders set the minimum and maximum HSV values (two sliders each for hue, saturation and value). Adjust them to change the color mask. If you want to see which colors are currently captured by the mask, switch the topic in Image View to /catched_colors/compressed.
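Conceptually, the mask is a per-pixel range check in HSV space: a pixel is kept when its hue, saturation and value each lie between the corresponding minimum and maximum slider values. The plain-Python sketch below illustrates that check; it is not the code of the color_mask node. It assumes OpenCV-style 8-bit ranges (H from 0 to 179, S and V from 0 to 255), and the names `rgb_to_hsv8` and `color_mask` are hypothetical. In practice, OpenCV's `cv2.inRange` applied to an HSV image performs the same inclusive comparison far more efficiently.

```python
import colorsys

# Assumption: OpenCV-style 8-bit HSV ranges (H: 0..179, S: 0..255, V: 0..255).
def rgb_to_hsv8(r, g, b):
    """Convert an 8-bit RGB pixel to 8-bit HSV in OpenCV's convention."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    # colorsys returns h in [0, 1); OpenCV stores hue as degrees / 2.
    return round(h * 180) % 180, round(s * 255), round(v * 255)


def color_mask(image, hsv_min, hsv_max):
    """Return a binary mask (rows of 0 or 255) of pixels inside [hsv_min, hsv_max].

    image: list of rows, each a list of (r, g, b) tuples with values 0..255.
    hsv_min, hsv_max: inclusive (h, s, v) bounds, i.e. the six slider values.
    """
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            hsv = rgb_to_hsv8(r, g, b)
            # A pixel is kept only if all three channels are within their bounds.
            inside = all(lo <= c <= hi for c, lo, hi in zip(hsv, hsv_min, hsv_max))
            mask_row.append(255 if inside else 0)
        mask.append(mask_row)
    return mask
```

For example, a range from (100, 100, 50) to (130, 255, 255) keeps saturated blue pixels and rejects white and red ones. Because hue wraps around, a single min/max range in this simple form cannot select colors that straddle hue 0, such as red.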

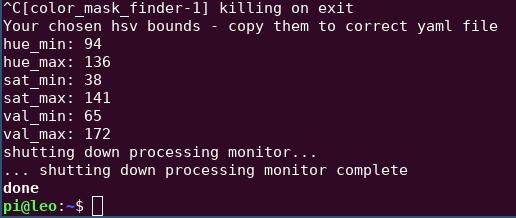

When you are satisfied with your color mask, stop both rqt and the node (with Ctrl+C). Your chosen values will then be printed in the terminal.

Save these values in a YAML file (preferably in the config directory of the leo_example_line_follower package). You can do this with nano: copy the printed values (with the mouse or Ctrl+Shift+C) and type on the rover:

Then, paste the values (Ctrl+Shift+V or with the mouse), save the file (Ctrl+O) and close nano (Ctrl+X).

Running the model is really simple. Just put the rover on the track and type in the terminal on the rover:

There are also a few roslaunch arguments that let you run the model with your own data (e.g. your color mask values).

The most important ones are as follows:

Every argument is documented; you can list all the arguments along with their documentation by running:

So, an example command could look like this:

To gather data, run our data_saver.py node on the rover using the roslaunch command.

The node has one required argument, duration, which specifies how long (in seconds) data will be recorded. You can also specify the output directory for the recorded data with the output_dir argument. For example, your command could look like this:

This will record data for 30 seconds and place all the recorded data in the test_drive directory (the node will create the directory if it doesn't exist).

First, the node waits for Twist messages on the /cmd_vel topic. Once it receives any message on this topic, it starts recording data. Data is recorded only while the rover is moving; if the rover stays in place, nothing is recorded.
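The recording rule described above can be sketched as a filter over time-stamped samples. This is a hypothetical illustration rather than the source of data_saver.py: the `Sample` type and `select_samples` function are made up here, and the sketch assumes that the `duration` clock starts at the first /cmd_vel message and that the rover counts as moving when either speed (the Twist `linear.x` or `angular.z` field) exceeds a small threshold.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class Sample:
    stamp: float     # time in seconds (samples are assumed to arrive in time order)
    linear: float    # assumed: linear.x of the latest /cmd_vel Twist
    angular: float   # assumed: angular.z of the latest /cmd_vel Twist
    image_name: str  # camera frame captured at this time


def select_samples(
    samples: Iterable[Sample], duration: float, eps: float = 1e-3
) -> List[Tuple[str, Tuple[float, float]]]:
    """Return (image_name, (linear, angular)) pairs that would be recorded.

    Assumptions: the clock starts at the first sample (the first /cmd_vel
    message) and runs for `duration` seconds; a sample is kept only if the
    rover is moving, i.e. |linear| > eps or |angular| > eps.
    """
    selected = []
    start = None
    for s in samples:
        if start is None:
            start = s.stamp  # the first message starts the recording window
        if s.stamp - start > duration:
            break  # recording window is over
        if abs(s.linear) > eps or abs(s.angular) > eps:
            selected.append((s.image_name, (s.linear, s.angular)))
    return selected
```

With this rule, a recording where the rover pauses for a while simply contains fewer images than the duration alone would suggest.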

After recording, your output directory will contain the images saved from the rover and a single labels.txt file. Each line of this file has the format img_name:label, where label is a tuple of two floats: the linear and angular speeds (in that order) of the rover in the situation shown in that image.
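If you want to inspect or reuse the recorded labels yourself, this format is easy to parse. Below is a minimal sketch, assuming each line looks like `img_0001.jpg:(0.2, -0.1)`, i.e. that the tuple is written in Python literal syntax; the file name is only an example, and the exact tuple formatting is an assumption, so check a few lines of your own labels.txt first. `parse_labels` is a hypothetical helper name.

```python
import ast
from typing import Dict, Tuple


def parse_labels(text: str) -> Dict[str, Tuple[float, float]]:
    """Parse labels.txt content into {image_name: (linear, angular)}.

    Assumes each non-empty line is "img_name:(linear, angular)" with the
    tuple in Python literal syntax.
    """
    labels = {}
    for line_no, line in enumerate(text.splitlines(), start=1):
        line = line.strip()
        if not line:
            continue  # skip blank lines
        # Split on the first ':' only (the image name must not contain ':').
        name, sep, raw = line.partition(":")
        if not sep:
            raise ValueError(f"line {line_no}: missing ':' separator")
        # literal_eval safely evaluates literals such as "(0.2, -0.1)".
        value = ast.literal_eval(raw.strip())
        if not (isinstance(value, tuple) and len(value) == 2):
            raise ValueError(f"line {line_no}: expected a 2-tuple, got {raw!r}")
        linear, angular = (float(v) for v in value)
        labels[name.strip()] = (linear, angular)
    return labels
```

For example, `parse_labels(Path("test_drive/labels.txt").read_text())` (with `Path` imported from `pathlib`) returns a dictionary mapping each image name to its (linear, angular) pair.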

Once your data is recorded, you need to organize it into the structure expected by the training notebook. To do so, run our prepare_data.py node with the rosrun command.

The node has three flags which you have to specify:

So, for example, your command can look like this (if you're running it in the home directory):

After the node finishes, the same directory will contain a zip file with the processed data, ready to upload to your notebook.
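Before uploading, you may want a quick look at what went into the archive. The sketch below uses only Python's standard `zipfile` module to count the files in a zip by extension. `summarize_zip` is a hypothetical helper, and it makes no assumption about the folder layout that prepare_data.py produces; it is just a generic sanity check (for example, that the number of images roughly matches the length of your recording).

```python
import zipfile
from collections import Counter
from pathlib import PurePosixPath


def summarize_zip(path):
    """Count the files in a zip archive by (lower-cased) extension.

    Directory entries are skipped; files without an extension are
    counted under "<none>".
    """
    counts = Counter()
    with zipfile.ZipFile(path) as zf:
        for info in zf.infolist():
            if info.is_dir():
                continue
            # Zip member names always use '/' as the separator.
            suffix = PurePosixPath(info.filename).suffix.lower() or "<none>"
            counts[suffix] += 1
    return dict(counts)
```

Call it as `print(summarize_zip("your_data.zip"))`, substituting the actual name of the generated archive.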

With your data ready, upload it to your notebook. A copy of our notebook is included in the repository you cloned, but you can also get it from this link.

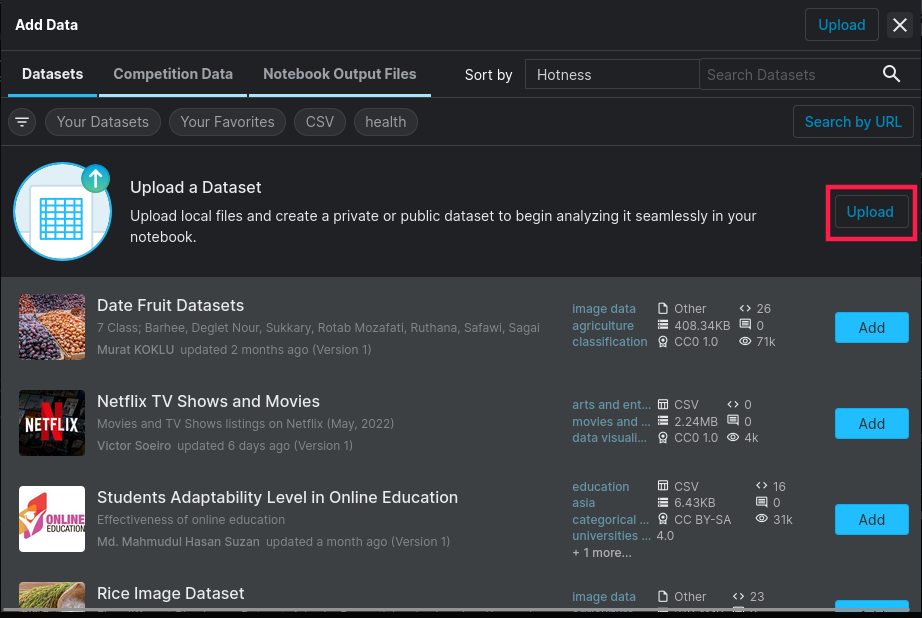

Once you have the notebook, you can upload the data using the Add data button in the upper-right corner.

Then, click the Upload button and drag in your zip file (you also need to give the dataset a name).

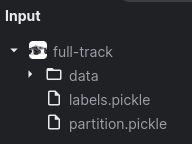

Once it's uploaded to the notebook, you should see something like this:

Now, you just have to run all the cells up to the "Custom tests" section to begin the training.

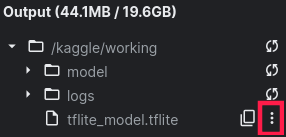

When the training is finished, you'll see your tflite model in the output section (the file name may differ if you changed the corresponding variable in the notebook).

The only thing left is to download the file and place it on the rover. To download the model, click the three dots that appear when you hover the cursor over the file.

Then, press Download and the model will be downloaded. To copy it onto the rover, you can follow the instructions from this tutorial.