An object detection example for stock Leo Rover

In this example, we will show you how to run Object Detection on the Leo Rover mobile robot.

After completing this tutorial, your rover should be able to recognize 91 objects from the COCO dataset (listed here) and display an image with bounding boxes drawn around the detected objects.

For object detection, we use models provided by TensorFlow and converted to TensorFlow Lite, so TensorFlow Lite must also be installed on the rover.

You can install the package with apt by running the following on the rover:

Then, source the ROS workspace:

Alternatively, you can get all the needed software from our leo_examples GitHub repository. Clone it on the rover into the ROS workspace directory (if you don't have one yet, first go through the ROS Development tutorial):

Now, you need to install all the dependencies for the downloaded packages:

Then, source the workspace and build the packages:

Running the object detection node is simple. First, connect to the rover via SSH (see the tutorial in the prerequisites). Once you are logged in to the rover, launch the node with the roslaunch command:

The given launch file has a few arguments:

For example, with some arguments set, the command can look like this:
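Launch arguments are passed to roslaunch as `name:=value` pairs after the package and launch file names. The minimal sketch below builds such a command as an argument list, which could then be handed to `subprocess.run` if you want to start the node from a script. The package, launch file, and argument names used here are hypothetical placeholders, not the real ones from the launch file's argument list.

```python
import shlex


def roslaunch_command(package: str, launch_file: str, args: dict[str, str]) -> list[str]:
    """Build a roslaunch argv; each launch argument is passed as name:=value."""
    return ["roslaunch", package, launch_file] + [
        f"{name}:={value}" for name, value in args.items()
    ]


if __name__ == "__main__":
    # Hypothetical names: substitute the real package, launch file and
    # argument names of the object detection example.
    cmd = roslaunch_command(
        "my_package", "my_detector.launch", {"model_path": "/home/pi/model.tflite"}
    )
    # shlex.join quotes each element only if the shell would need it.
    print(shlex.join(cmd))
```

Building a list rather than one string avoids quoting problems when argument values contain spaces.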

Now, you need to display the output of the model. Because an SSH session doesn't let you run GUI applications (unless you connect with the -X flag), you will run them on your computer instead, connected to the ROS master running on the rover. To do so, go to the ROS workspace on your computer, source the workspace, and export the ROS environment variables:
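In ROS 1, the variables usually involved are `ROS_MASTER_URI`, which tells nodes on your computer where the master runs (here, on the rover), and `ROS_IP`, the address your computer advertises to other nodes. The following minimal sketch composes the corresponding `export` lines. The addresses in it are placeholder assumptions, so substitute your rover's address and your computer's address on the same network; port 11311 is the ROS 1 default master port.

```python
import ipaddress


def ros_remote_env(rover_ip: str, computer_ip: str, master_port: int = 11311) -> dict[str, str]:
    """Build the ROS 1 variables that point local nodes at the master on the rover.

    rover_ip and computer_ip are placeholders: use your rover's address and an
    address of your computer that the rover can reach.
    """
    # ip_address raises ValueError on malformed addresses, catching typos early.
    ipaddress.ip_address(rover_ip)
    ipaddress.ip_address(computer_ip)
    return {
        # The master runs on the rover; 11311 is the default ROS 1 master port.
        "ROS_MASTER_URI": f"http://{rover_ip}:{master_port}",
        # Address that nodes on the rover use to reach nodes on this computer.
        "ROS_IP": computer_ip,
    }


def as_exports(env: dict[str, str]) -> str:
    """Render the variables as shell export lines."""
    return "\n".join(f"export {name}={value}" for name, value in env.items())


if __name__ == "__main__":
    print(as_exports(ros_remote_env("10.0.0.1", "10.0.0.52")))
```

Remember that the exports only apply to the terminal they are run in, so run rqt from that same terminal.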

Once you have done this, you can run rqt on your computer:

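There, you need to open two plugins: Image View (Plugins > Visualization > Image View) and Dynamic Reconfigure (Plugins > Configuration > Dynamic Reconfigure).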

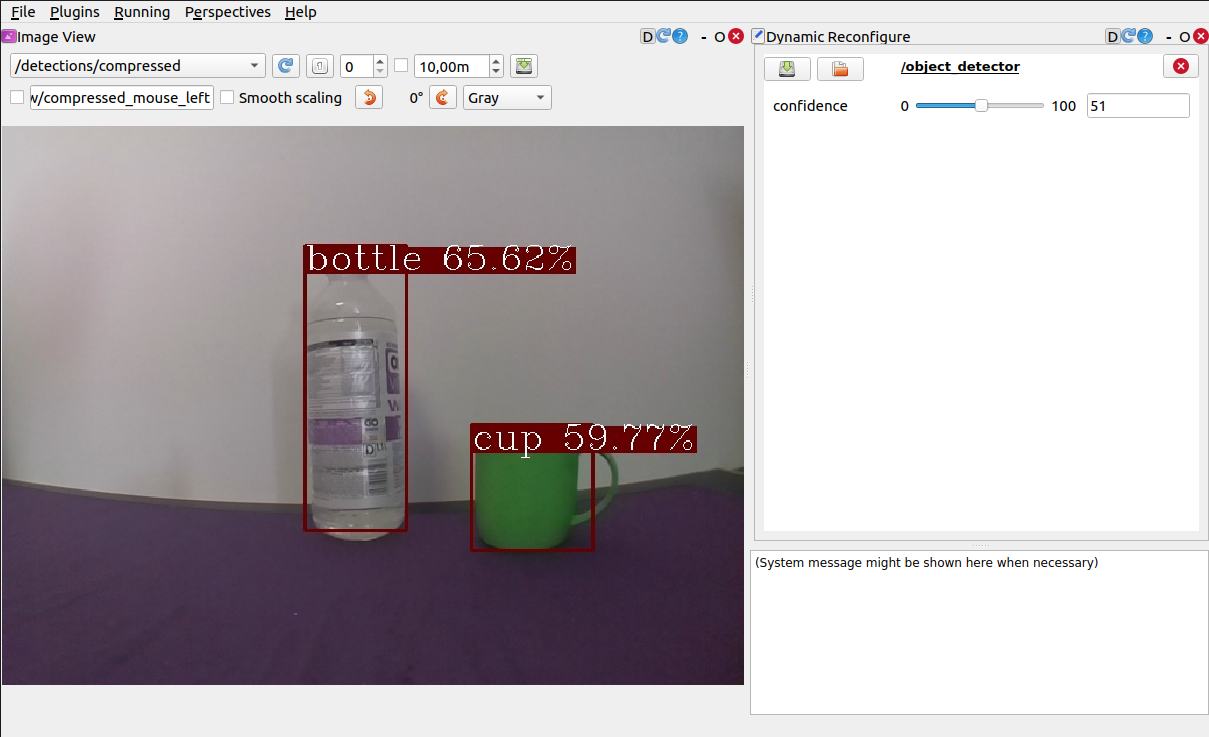

In Image View, choose /detections/compressed from the topic drop-down list. This is the processed image with the detections drawn on it.

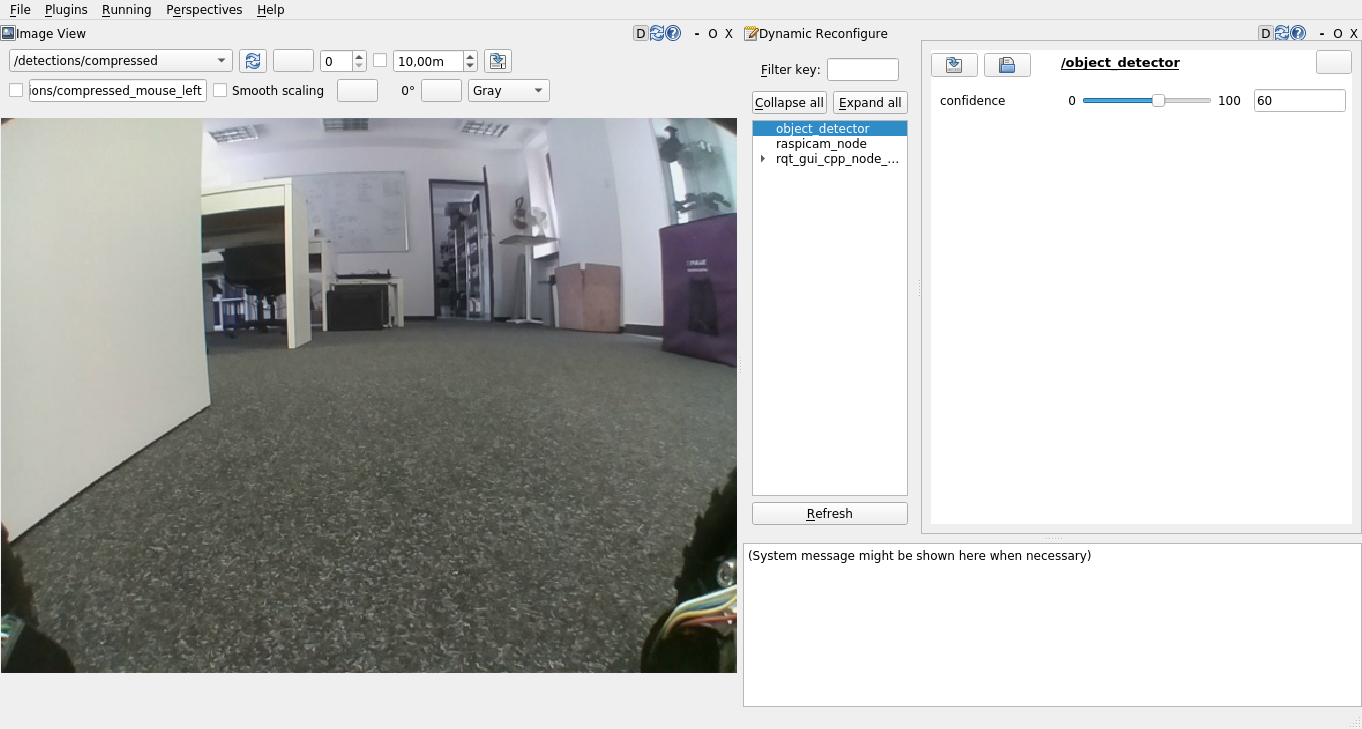

In Dynamic Reconfigure, choose object_detector. You should see something like this:

On the right side of Dynamic Reconfigure, you can see a slider for the confidence parameter. It sets the confidence threshold for the neural network's guesses: only detections with a confidence higher than this value are drawn. Change the value to see how the detections change.
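The effect of the slider can be illustrated with a small stand-alone sketch. This is not the node's actual code: the `Detection` class and `filter_by_confidence` function are hypothetical, and only the rule "keep detections whose confidence is higher than the threshold" comes from the description above.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    confidence: float  # score in [0, 1] reported by the network


def filter_by_confidence(detections: list[Detection], threshold: float) -> list[Detection]:
    """Keep only detections whose confidence is strictly higher than threshold."""
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be between 0 and 1")
    return [d for d in detections if d.confidence > threshold]


if __name__ == "__main__":
    dets = [
        Detection("person", 0.91),
        Detection("cup", 0.48),
        Detection("chair", 0.30),
    ]
    # A low threshold keeps more (possibly wrong) guesses; a high one keeps fewer.
    for t in (0.25, 0.5):
        print(t, [d.label for d in filter_by_confidence(dets, t)])
```

Raising the threshold trades missed objects for fewer false detections, which is the trade-off you can observe while moving the slider.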

Place objects in the camera's view. If they are part of the dataset and the network is confident enough in its guess, a bounding box and a text label will appear around each of them.
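To draw a box, a detection has to be mapped from model coordinates to image pixels. As a sketch, assuming an SSD-style TensorFlow Lite detection model that reports each box as normalized `(ymin, xmin, ymax, xmax)` values (an assumption about the model format, not something this tutorial states), the conversion and a label caption could look like this. The function names are hypothetical.

```python
def to_pixel_box(
    box: tuple[float, float, float, float], width: int, height: int
) -> tuple[tuple[int, int], tuple[int, int]]:
    """Convert a normalized (ymin, xmin, ymax, xmax) box to pixel corners.

    Returns ((x1, y1), (x2, y2)): the top-left and bottom-right corners.
    """
    # Clamp each coordinate to [0, 1] so the box stays inside the image.
    ymin, xmin, ymax, xmax = (min(max(v, 0.0), 1.0) for v in box)
    top_left = (round(xmin * width), round(ymin * height))
    bottom_right = (round(xmax * width), round(ymax * height))
    return top_left, bottom_right


def caption(label: str, confidence: float) -> str:
    """Text drawn next to the box, e.g. a class name and its confidence."""
    return f"{label}: {confidence:.0%}"


if __name__ == "__main__":
    print(to_pixel_box((0.1, 0.2, 0.9, 0.6), 640, 480), caption("person", 0.91))
```

In a real node, the two corners could be passed to a drawing call such as OpenCV's `cv2.rectangle(image, pt1, pt2, color, thickness)`, and the caption to `cv2.putText`.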

You can also run the node with your own models (either trained from scratch or found on the internet). To make the node use your files, set the launch arguments explained above to point at them.
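A custom model usually comes with a labels file that maps the class ids it outputs to names. The sketch below assumes a plain-text file with one class name per line, where the line index is the class id; check the format your model and the node actually expect, since this layout is an assumption. The function names are hypothetical.

```python
from pathlib import Path


def parse_labels(text: str) -> list[str]:
    """Return class names, one per line; the list index is the class id.

    Blank lines are kept (as empty names) so the ids of later lines do not shift.
    """
    return [line.strip() for line in text.splitlines()]


def load_labels(path: str) -> list[str]:
    """Read a labels file from disk."""
    return parse_labels(Path(path).read_text(encoding="utf-8"))


def label_for(class_id: int, labels: list[str]) -> str:
    """Look up a class id, with a placeholder for ids outside the file."""
    if 0 <= class_id < len(labels):
        return labels[class_id]
    return f"unknown ({class_id})"


if __name__ == "__main__":
    labels = parse_labels("person\nbicycle\ncar\n")
    print(label_for(2, labels), label_for(7, labels))
```

A quick sanity check with your own model is that the number of labels matches the number of classes the model was trained on; a mismatch typically shows up as wrong names on otherwise correct boxes.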

After completing this tutorial, you can try other examples from the leo_examples repository (line follower and follow ARTag), or try other integrations from our site.